AI is no longer a side experiment: it has become operational infrastructure. Budgets are measurable, executive dashboards include AI KPIs, and every business unit wants a piece of the promise. But moving from potential to production comes with new friction.

The technology itself isn’t the main challenge anymore. The real cost appears when leaders must make AI consistent: reliable enough to trust, governed enough to scale, and efficient enough to justify. Each of these requires not just technical architecture, but organizational discipline, and that’s where most strategies falter.

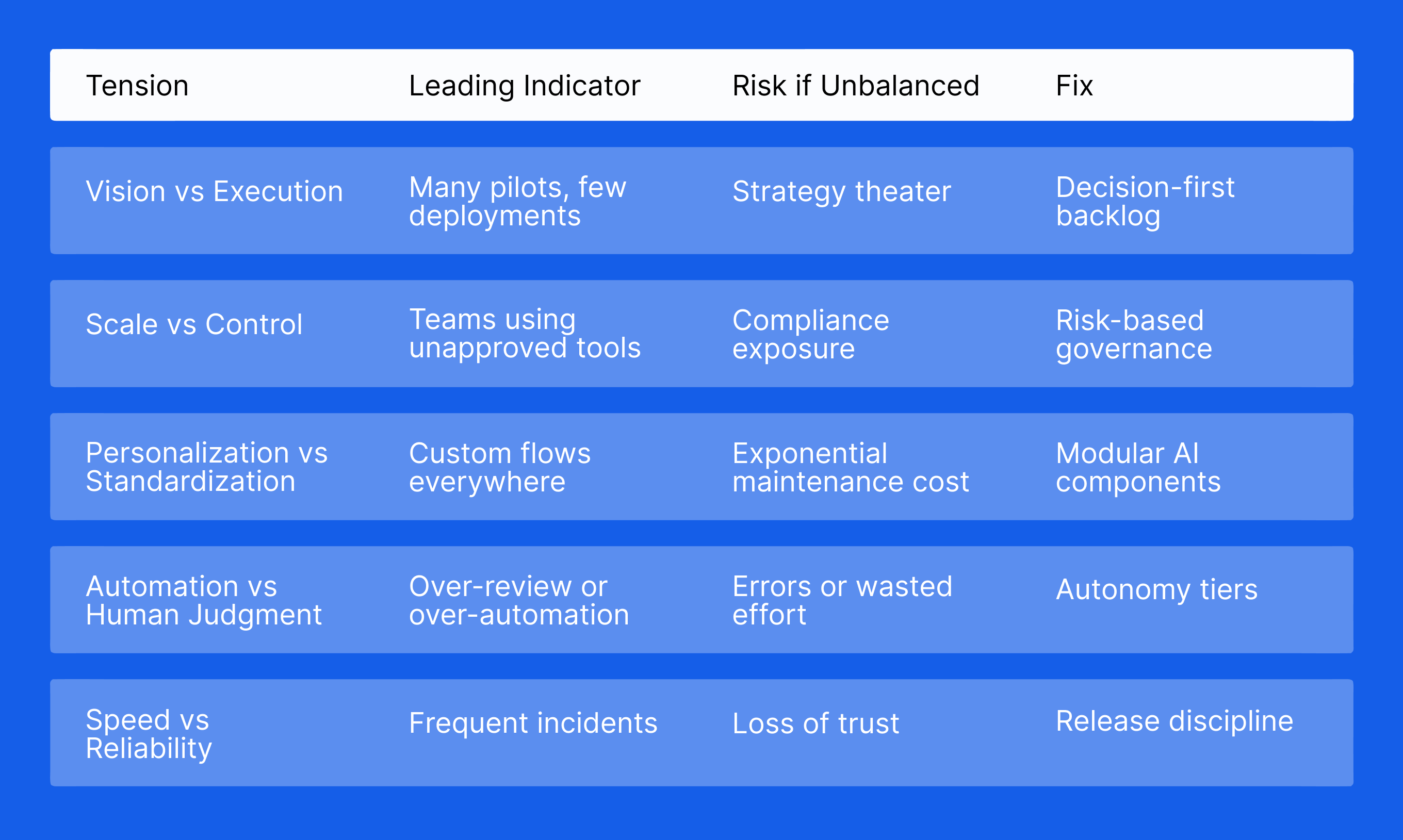

Across industries, five recurring tensions define the state of AI maturity in 2025. They’re not roadblocks but design constraints: predictable trade-offs that every organization must navigate.

Recognizing them early doesn’t make AI easy, but it makes progress repeatable, and reversals less costly.

1. Vision vs. execution: The widening gap between strategy decks and real deployments

Every enterprise now has an AI vision statement: augment teams, automate processes, personalize experiences, reduce risk.

Yet few can show working systems that deliver those outcomes. The gap between strategy decks and deployed products has become one of the defining issues of AI adoption.

Where most strategies fail

- No single decision owner. Product teams define features, IT owns infrastructure, Risk defines controls, but no one owns the end-to-end decision the AI is supposed to improve.

- Pilot paralysis. Dozens of proofs of concept remain stuck because success criteria were never defined in measurable, operational terms.

- Data wish-casting. Strategies depend on an idealized future dataset (clean, labeled, unified) rather than the messy, partial data that actually exists today.

What disciplined execution looks like

- Define the decision, not the model. “Approve or reject a supplier in under two hours at less than 1% error” is a decision. “Deploy anomaly detection” is a feature. One drives business value; the other drives activity.

- Write acceptance tests before the model. Establish latency ceilings, accuracy thresholds by segment, escalation rules, and rollback triggers. Treat these like functional specs, not afterthoughts.

- Adopt an AI release cadence. Ship in small, measurable increments. For example, reduce support handling time by 15% in a single workflow before expanding scope. Small wins compound faster than ambitious pilots that never ship.
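As a rough illustration of writing acceptance tests before the model, the sketch below encodes a latency ceiling, per-segment accuracy floors, and a rollback trigger as data, then checks a candidate release against them. The class, function names, segment names, and numbers are all hypothetical; only the "less than 1% error" target echoes the supplier example above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriteria:
    """Hypothetical release spec, written before any model exists."""
    max_p95_latency_ms: float
    min_accuracy_by_segment: dict[str, float]
    rollback_error_rate: float  # live error rate above this triggers a rollback


def evaluate_release(criteria: AcceptanceCriteria,
                     p95_latency_ms: float,
                     accuracy_by_segment: dict[str, float]) -> list[str]:
    """Return the failed checks; an empty list means the release passes."""
    failures = []
    if p95_latency_ms > criteria.max_p95_latency_ms:
        failures.append("latency ceiling exceeded")
    for segment, floor in criteria.min_accuracy_by_segment.items():
        observed = accuracy_by_segment.get(segment)
        # A segment with no measurement counts as a failure, not a pass.
        if observed is None or observed < floor:
            failures.append(f"accuracy below floor for segment '{segment}'")
    return failures


def should_roll_back(criteria: AcceptanceCriteria, live_error_rate: float) -> bool:
    """Rollback trigger: compare the live error rate with the agreed limit."""
    return live_error_rate > criteria.rollback_error_rate


# Illustrative values only.
criteria = AcceptanceCriteria(
    max_p95_latency_ms=500.0,
    min_accuracy_by_segment={"new_suppliers": 0.99, "existing_suppliers": 0.99},
    rollback_error_rate=0.01,
)
failures = evaluate_release(
    criteria,
    p95_latency_ms=420.0,
    accuracy_by_segment={"new_suppliers": 0.995, "existing_suppliers": 0.97},
)
print(failures)
```

Keeping the criteria as plain data means the same object can gate a pre-release test run and feed the rollback check in production.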

Signals that vision and execution are finally aligned

- You maintain a living backlog of decisions, each with a clear owner, data source, and performance metric.

- Model cards and runbooks exist before the first production call is made, not after something breaks.

- You can disable a model without disrupting the business process, meaning automation is structured, not brittle.

2. Scale vs. control: When innovation outpaces governance

As AI spreads across departments, what begins as innovation often turns into fragmentation. Teams experiment with models, integrate APIs, and automate workflows faster than internal governance can adapt. What was once a single pilot quickly becomes a distributed ecosystem of models, prompts, and scripts that operate outside any unified control.

For leadership, the challenge isn’t enthusiasm. It’s oversight. How can an organization encourage experimentation without losing track of what is being deployed, where the data comes from, or what rules are being applied?

The risks of uncontrolled scale

- Shadow AI. Teams adopt unapproved tools or plug external APIs into core workflows. Visibility drops, and with it, the ability to manage compliance or data risk.

- Duplicated efforts. Multiple departments solve the same problem differently, using incompatible frameworks that create technical debt.

- Governance lag. Policies are written after deployment instead of being embedded in the development process, leading to a cycle of patching and correction.

What effective control looks like

Control is not about limiting innovation. It is about creating a framework that allows responsible speed. Good governance feels invisible: it guides action without blocking it.

- Central principles, local execution. Establish a small set of non-negotiable rules for data privacy, model validation, and vendor usage. Then allow teams to build freely within those boundaries.

- Risk-based oversight. Not every AI system needs the same level of scrutiny. Classify projects by risk: customer-facing versus internal, advisory versus autonomous, sensitive versus low-impact. Apply the appropriate level of testing and review to each.

- Auditability as a default feature. Every prediction, prompt, and action should be traceable. Logs should capture input data, model version, outcome, and any human intervention. This turns compliance from an afterthought into an operational habit.
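One way to picture auditability by default is a structured log record carrying the four fields named above: input data, model version, outcome, and any human intervention. The following is a minimal sketch using only the Python standard library; the `AuditRecord` class, its field names, and the sample values are assumptions for illustration, not a prescribed schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


def _utc_now() -> str:
    return datetime.now(timezone.utc).isoformat()


@dataclass
class AuditRecord:
    """Hypothetical audit entry written for every prediction or action."""
    model_version: str
    input_data: dict
    outcome: str
    human_intervention: Optional[str] = None  # None means no human touched it
    timestamp: str = field(default_factory=_utc_now)


def to_log_line(record: AuditRecord) -> str:
    """Serialize the record as one JSON line, ready for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


# Illustrative values only.
record = AuditRecord(
    model_version="risk-classifier-1.4.0",
    input_data={"document_id": "doc-001"},
    outcome="flagged_as_anomaly",
    human_intervention="analyst confirmed the flag",
)
print(to_log_line(record))
```

One JSON object per line keeps the log easy to search and lets a later review reconstruct which model version produced which outcome.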

How to operationalize control

- Reusable blueprints. Provide reference architectures for common needs, such as document summarization or predictive scoring, so teams do not reinvent them unsafely.

- Policy integrated in code. Enforce constraints automatically during build and deployment, for example blocking external API calls that violate data residency rules.

- Continuous review loops. Treat AI incidents the same way Site Reliability Engineers handle outages. Hold post-incident reviews, fix the root cause in code or policy, and make the lessons available across teams.
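The "policy integrated in code" idea can be sketched as a check that runs before any outbound API call and refuses hosts not approved for the region the data belongs to. The allowlist, region codes, and `example.com` hostnames below are hypothetical placeholders; a real setup would load them from whatever policy source the organization maintains.

```python
from urllib.parse import urlparse

# Hypothetical policy table: hosts approved for data from each region.
ALLOWED_HOSTS_BY_REGION = {
    "eu": {"api.eu.example.com"},
    "mx": {"api.mx.example.com"},
}


class PolicyViolation(Exception):
    """Raised when a call would send data to a host outside the policy."""


def check_outbound_call(url: str, data_region: str) -> None:
    """Raise PolicyViolation unless the URL's host is approved for the region."""
    host = urlparse(url).hostname
    allowed = ALLOWED_HOSTS_BY_REGION.get(data_region, set())
    if host not in allowed:
        raise PolicyViolation(
            f"host {host!r} is not approved for data from region {data_region!r}"
        )


# An approved call passes silently; a non-approved one is blocked.
check_outbound_call("https://api.eu.example.com/v1/summarize", "eu")
try:
    check_outbound_call("https://api.mx.example.com/v1/summarize", "eu")
except PolicyViolation as error:
    print(f"blocked: {error}")
```

The same function can run in a build-time test and at runtime, so the rule is enforced by tooling rather than by a review meeting.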

Signs that scale and control are in balance

- Every AI asset can be traced from data source to output, with clear ownership.

- Builders can innovate without waiting for manual approvals because the rules are built into their tools.

- Governance meetings focus on exceptions and insights, not policing routine work.

When innovation and governance evolve together, AI stops being a compliance risk and starts functioning as reliable infrastructure.

3. Personalization vs. Standardization: The cost of tailoring AI to every use case

Every department wants AI shaped to “how they work.” But scaling hundreds of custom workflows is unsustainable. The more personalization increases, the more technical debt, drift, and dependency the organization accumulates.

This tension is now visible across enterprise environments—especially in LATAM companies modernizing legacy stacks while integrating AI incrementally.

Where the tension becomes destructive

- Fragmented logic. Teams rewrite similar automations from scratch, producing incompatible rules, agents, or datasets.

- Model sprawl. Several versions of the “same” model exist, each adapted to the quirks of one team.

- Hidden maintenance cost. Personalization accelerates early adoption but multiplies long-term upkeep.

What a sustainable balance looks like

- Modular standardization. Standardize the building blocks, not the final workflow. Let teams assemble custom flows from approved modules.

- Decision templates. Create reusable templates for common decisions: approvals, risk scoring, routing.

- Local customization only at the edge. Allow teams to fine-tune thresholds or business rules while keeping data flows, validation logic, and guardrails centralized.
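Customization at the edge can be sketched as a central configuration in which only a short list of keys is open to team overrides. Everything in this example (key names, default values, and the `build_team_config` helper) is a hypothetical illustration of the pattern rather than a description of any specific product.

```python
# Centrally governed defaults (hypothetical keys and values).
CENTRAL_CONFIG = {
    "validation": "strict",
    "guardrails": "enabled",
    "approval_threshold": 0.80,
    "escalation_threshold": 0.50,
}

# The only keys a team may tune locally.
TEAM_TUNABLE_KEYS = {"approval_threshold", "escalation_threshold"}


def build_team_config(overrides: dict) -> dict:
    """Merge team overrides into the central config, rejecting locked keys."""
    locked = set(overrides) - TEAM_TUNABLE_KEYS
    if locked:
        raise ValueError(f"centrally governed keys cannot be overridden: {sorted(locked)}")
    return {**CENTRAL_CONFIG, **overrides}


# A team tightens its approval threshold; guardrails stay untouched.
procurement_config = build_team_config({"approval_threshold": 0.90})
print(procurement_config)
```

Because the merge always starts from the central defaults, a change to validation or guardrails reaches every team without touching their local settings.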

How Creai solves this tension in practice

Creai’s approach relies on reusable components (OCR, document summarization, workflow automation, virtual agents, sentiment analysis) that plug into any process but remain governed centrally.

This preserves speed without sacrificing consistency, which is critical for organizations with distributed operations in Mexico, Colombia, Chile, or Spain.

4. Automation vs. Human Judgment: Where autonomy ends and oversight begins

As AI takes over more operational tasks, leaders must redefine who decides what, under which conditions, and with what level of accountability.

This tension is especially visible in customer service, compliance, and back-office operations.

The consequence of not defining boundaries

- Over-automation. Teams automate decisions that should remain human—like rejecting a supplier or escalating a fraud case.

- Under-automation. Fear of risk forces humans to re-review every AI suggestion, eliminating the productivity gains the AI was built for.

- Ambiguous accountability. When a model makes a mistake, ownership becomes unclear.

What clarity looks like

Defined autonomy tiers.

- Tier 1: Full automation for low-risk, reversible tasks.

- Tier 2: AI suggestions with mandatory human approval.

- Tier 3: Human-in-control for irreversible or high-risk decisions.

Critical exception rules. Systems should escalate when uncertainty is high or data quality drops.

Human-AI collaboration design. Flow diagrams of who validates, when, and with what evidence create predictable accountability.
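The autonomy tiers and the exception rule can be combined in a small routing function: a task starts at its assigned tier, and a Tier 1 task is escalated to human approval when confidence is low or data quality checks fail. This is a minimal sketch; the `route` function, the `min_confidence` default of 0.9, and the boolean data-quality flag are assumptions chosen for illustration.

```python
from enum import Enum


class Tier(Enum):
    FULL_AUTOMATION = 1   # low-risk, reversible tasks
    HUMAN_APPROVAL = 2    # AI suggests, a human must approve
    HUMAN_IN_CONTROL = 3  # irreversible or high-risk decisions


def route(base_tier: Tier, confidence: float, data_quality_ok: bool,
          min_confidence: float = 0.9) -> Tier:
    """Apply the exception rule: escalate automated tasks when in doubt.

    Tiers 2 and 3 already involve a human, so they are returned unchanged.
    """
    uncertain = confidence < min_confidence
    if base_tier is Tier.FULL_AUTOMATION and (uncertain or not data_quality_ok):
        return Tier.HUMAN_APPROVAL
    return base_tier


# A normally automated task is escalated because confidence is low.
print(route(Tier.FULL_AUTOMATION, confidence=0.62, data_quality_ok=True))
```

Escalation only ever moves toward more human involvement, so a noisy confidence score cannot quietly hand a high-risk decision to the model.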

A LATAM example

A financial services company in Mexico used AI to classify risk documents but required human analysts to validate anomalies. Result: 35% faster processing without compromising compliance—an approach now replicated across several LATAM operations.

5. Speed vs. Reliability: The pressure to deploy fast without breaking trust

Executives want AI delivered yesterday. But rushed deployment introduces instability, data quality issues, bias, and unexpected errors in production.

This tension is the most underestimated—and the most expensive.

What causes reliability to collapse

- Skipping non-functional requirements. Latency ceilings, error budgets, resource limits.

- No golden datasets. Teams train on inconsistent datasets and expect stable outputs.

- Lack of monitoring. Drift goes unnoticed until users complain.

What sustainable speed looks like

- AI release discipline. Weekly or biweekly releases with predefined acceptance criteria.

- Pre-production stress tests. Test on worst-case scenarios, not ideal cases.

- Performance dashboards. Real-time monitoring of accuracy, latency, costs, and drift.
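As one simple stand-in for drift monitoring, the sketch below tracks accuracy over a rolling window of labeled outcomes and raises a flag when it falls below an agreed baseline by more than a tolerance. The `AccuracyMonitor` class and all numbers are hypothetical, and a drop in rolling accuracy is only a proxy: it assumes ground-truth labels arrive soon enough to be useful, and it does not measure shifts in the input distribution.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Hypothetical rolling-accuracy check used as a rough drift signal."""

    def __init__(self, baseline: float, tolerance: float, window: int):
        self.baseline = baseline
        self.tolerance = tolerance
        # deque with maxlen drops the oldest result once the window is full.
        self.results = deque(maxlen=window)

    def record(self, correct: bool) -> None:
        self.results.append(correct)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def drifted(self) -> bool:
        accuracy = self.rolling_accuracy()
        return accuracy is not None and accuracy < self.baseline - self.tolerance


# Illustrative values only: ten correct predictions, then three errors.
monitor = AccuracyMonitor(baseline=0.95, tolerance=0.05, window=10)
for _ in range(10):
    monitor.record(True)
healthy = monitor.drifted()
for _ in range(3):
    monitor.record(False)
print(healthy, monitor.drifted())
```

A check like this is what lets a dashboard alert the owning team before users start complaining.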

The reliability-speed framework

A simple checklist Creai uses before pushing any AI system to production:

- Data validated?

- Edge cases tested?

- Rollback path documented?

- Owners identified?

- Logs and traceability enabled?

- Cost ceiling established?
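The checklist above can be turned into an automated release gate. The sketch below is one possible encoding, with hypothetical item keys and function names; it illustrates the idea and is not a description of Creai's internal tooling.

```python
# The six checklist questions as machine-readable keys (names are illustrative).
CHECKLIST = (
    "data_validated",
    "edge_cases_tested",
    "rollback_path_documented",
    "owners_identified",
    "logs_and_traceability_enabled",
    "cost_ceiling_established",
)


def missing_items(status: dict) -> list:
    """Return checklist items that are absent or not marked True."""
    return [item for item in CHECKLIST if status.get(item) is not True]


def ready_for_production(status: dict) -> bool:
    """The release may proceed only when every item is confirmed."""
    return not missing_items(status)


# Example: everything is confirmed except the cost ceiling.
status = {item: True for item in CHECKLIST}
status["cost_ceiling_established"] = False
print(ready_for_production(status), missing_items(status))
```

Treating an absent answer as a failure keeps the gate conservative: a system cannot ship simply because nobody filled in the form.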

A Practical Framework: The AI Leadership Tension Map

A quick diagnostic tool leaders can use to identify where their organization is stuck:

Conclusion: AI maturity is not technical — it’s architectural

Enterprises that scale AI in 2025 do not win because of better models. They win because of better operational discipline:

- Better decision definitions

- Better guardrails

- Better modularization

- Better ownership

- Better release practices

AI becomes reliable infrastructure when leaders treat it as such. For companies in LATAM and Europe—where legacy systems, regulatory pressure, and talent bottlenecks intersect—the organizations that master these tensions will set the competitive standard for years. If you’re navigating these tensions and want an applied, execution-first partner, Creai helps teams design, build, and operate AI systems with rigor, speed, and measurable impact.

Whether you need to audit your current stack, streamline a critical workflow, or accelerate AI adoption responsibly—our team can support you end-to-end. Get in touch with Creai to explore how we can help you scale AI with clarity, consistency, and real operational results.

FAQ

1. What are the biggest challenges of enterprise AI adoption in 2025?

The main challenges are organizational, not technical: aligning vision with execution, governing AI at scale, balancing personalization with standardization, defining human oversight boundaries, and deploying reliably without sacrificing speed.

2. Why do AI pilots fail to reach production?

Common causes include unclear decision ownership, lack of acceptance criteria, data dependencies based on unrealistic assumptions, and absence of a release cadence.

3. How can companies prevent AI fragmentation across teams?

Use a centralized governance layer with reusable AI components, risk-based oversight, and auditability-by-default. Allow autonomy only within approved guardrails.

4. Should AI automate decisions or only support them?

It depends on risk. Low-impact decisions can be fully automated; high-impact or irreversible ones should remain human-led with AI suggestions.

5. How can enterprises personalize AI without creating technical debt?

Standardize core modules (validation, data flows, prompts, model governance) and allow light customization at the workflow edge.
